sel4 Notes

First, What the Hell is seL4?

So here’s the elevator pitch:

seL4 is a microkernel that’s so small, so secure, and so deterministic, you could literally use it to fly a drone, run a pacemaker, or control a satellite—with mathematical proof that it won’t screw up.

It’s not your average Linux or Windows-style kernel. It’s minimalist, formal-verification-friendly, and capability-based. That last one is key—more on that soon.

Big Picture: How Most Kernels Work

Traditional kernels like Linux or Windows? They’re monolithic:

- Big codebase (millions of lines).

- Everything runs in kernel space: scheduler, drivers, filesystem, network stack.

- If any part crashes or misbehaves, your whole system is toast.

They prioritize performance and features. But they sacrifice isolation and guarantees.

Now think about it—if you’re building a critical system (nuclear reactor? medical robot?), you can’t afford uncertainty or bugs. That’s where seL4 walks in.

Enter seL4: The Hardcore Minimalist

Microkernel Philosophy

-

seL4 strips the kernel down to the bare minimum:

- Scheduling

- IPC (Inter-Process Communication)

- Virtual memory

- Capability system

-

Everything else? User space. Filesystems, device drivers, networking—all pushed out.

If it’s not about safe and fast resource management, it doesn’t belong in seL4.

Capabilities Instead of Permissions

Here’s where it gets spicy.

In Linux, processes ask the kernel:

“Can I read /etc/passwd? Can I send a signal to PID 1234?”

seL4 flips the model:

“You don’t ask permission. You either have the capability, or you don’t even know the resource exists.”

Capabilities are unforgeable references to kernel objects (like threads, memory, endpoints). If your process doesn’t have a capability, it’s like the thing literally doesn’t exist. Perfect for security isolation.

Formal Verification: Bug-Proof by Math

Here’s the biggest flex:

seL4 is the first ever OS kernel that’s been mathematically proven to be correct.

Yeah. Like… they wrote math proofs that say:

- This pointer will never be NULL.

- This buffer will never overflow.

- This syscall will always behave as defined.

Compare that to Linux: “Well, if it crashes, we’ll patch it next week.”

seL4 is more like:

“We know it can’t crash—because theorems.”

How seL4 Works: Startup to Runtime

Let’s imagine the system boots:

- Bootloader hands off control to

init_kernel(). -

Kernel sets up:

- Kernel page tables

- Memory regions

- IRQs

- Capability space (CNode)

- It creates the root thread with a small set of initial capabilities (like memory, IPC endpoints).

-

After that, the kernel is basically hands-off.

- All system management (creating threads, mapping memory) happens in userspace, using system calls gated by capabilities.

It’s like the kernel is a hyper-paranoid concierge:

“You can only do what you’ve been explicitly allowed to. And I’m not gonna help unless you show me a valid keycard (capability).”

What’s Different vs Linux / Windows?

| Feature | seL4 | Linux / Windows |

|---|---|---|

| Kernel Type | Microkernel | Monolithic |

| Security | Capability-based (zero ambient authority) | Permissions model (UID/GID, ACLs) |

| Verification | Formally verified | No formal proofs |

| Size | ~10K LOC | 20M+ LOC |

| Failure Domain | Small (isolated apps) | Whole system crash risk |

| Performance | Near-native (crazy optimized IPC) | High, but risk of bugs |

| Dev Model | Minimal TCB, build everything yourself | Batteries included (with bugs) |

Real Talk: Why Would You Use seL4?

If you’re building a:

- Medical device

- Spacecraft

- Secure smartphone

- Military drone

- Blockchain hardware wallet

…and you need to prove your OS won’t panic, leak memory, or let malware sneak in?

You use seL4.

But if you’re building a web server or gaming laptop? Stick with Linux—seL4 is not here to replace your desktop OS (yet).

TL;DR Like You’re 5

- Most OSes are big janky castles with guards at the gates.

-

seL4 is a tiny, hyper-disciplined vault that says:

“I’ll only open this drawer if you show me the exact key, and I’ll mathematically prove I’ll never open the wrong one.”

It’s clean, correct, secure, and scary-smart.

Now why the hell i am doing this, well because i am upto something thats true, we (someone and i) are trying to build something crazyy :D

TL;DR: For MicroBPF (now hypOS) — start with seL4 as your base. Don’t reinvent the kernel. Yet.

Building the BPF execution + syscall plumbing on top. Focus your genius where it matters.

What’s MicroBPF (now hypOS) Trying to Do Again?

You’re building:

- A secure unikernel-like OS for embedded or IoT.

- Runs custom apps / BPF logic in an isolated, sandboxed way.

- Uses eBPF to plug in logic without modifying the core system.

- Probably aims for real-time, deterministic, tamper-proof execution.

Option A: Use seL4 as Base

Why it’s 🔥 for MicroBPF:

| Feature | seL4 Benefit |

|---|---|

| Isolation | Capabilities + separate address spaces = pure sandboxing. Perfect for running untrusted eBPF logic. |

| Minimal | 10K LOC kernel. No bloat. Ideal for embedded. |

| Formally verified | We can confidently expose APIs to BPF. No weird kernel crashes due to rogue plugins. |

| Policy-as-code | we could enforce execution limits, syscall limits, memory caps on BPF programs without needing a “security module.” It’s built in. |

Risks:

-

We’ll need to build your own:

- BPF VM or loader

- ELF loader (if BPF code is compiled separately)

- Minimal syscall interface

- Sandboxing engine (or reuse camkes)

- Tooling is mid. we’ll probably need to write some Rust/C shims.

- Debugging IPC/Caps = 🧠 pain.

BUT: This pain is in the exact area we want to specialize in. So it’s worth it.

Option B: Use Zephyr, Tock, or Fuchsia

Zephyr:

- Real-time, supported on tons of boards, good driver support.

- Not formally verified. BPF integration is non-trivial.

- Threading model is messy if we want capability-style sandboxing.

Tock (Rust embedded OS):

- Everything is a capsule. Secure by design.

- No BPF support out of the box. Sandbox model is harder to map to a BPF VM.

Fuchsia (Google’s microkernel):

- Super modern. Comes with its own VMO model, Sandboxing, Handles.

- MASSIVE. Not good for low-resource hardware. Not easily portable to tiny IoT boards.

Option C: Build your own kernel from scratch

- It will take us a year to match what seL4 gives us in week 1.

- We’ll spend time debugging schedulers and memory paging instead of building BPF logic.

- Fun? Yes. Strategic for MicroBPF? Not yet.

Maybe v3 of MicroBPF, when we know exactly what kernel we want, we write your own.

Now

1. eBPF Integration into seL4

What seL4 is Missing:

- No dynamic code execution engine.

- No JIT or interpreter infrastructure.

- No in-kernel programmable logic.

What We Need to Do:

- Embed an eBPF interpreter (initially) into a userspace process with a capability to perform syscall-like interactions (safe I/O, memory access, IPC).

- Later phases: Consider building a minimal in-kernel eBPF VM that runs inside a separate kernel thread or isolated core (very tricky, see “TCB growth” note below).

Required Changes:

- Create a BPF loader mechanism using seL4 IPC or shared memory from a config agent.

- Write a verifier for the subset of eBPF We support (bounded loops, no heap alloc, memory-safe stack-only access).

- Define seL4 helper syscalls or capability types that eBPF programs can invoke safely.

2. Dynamic Runtime Extensions

What seL4 is Missing:

- Kernel is static: no concept of injecting logic post-boot.

- No dynamic reconfiguration model.

What WE Need to Do:

-

Run a privileged “control plane agent” in user space that:

- Loads BPF extensions

- Configures routing policies or metrics

- Uses capabilities to communicate with drivers or data-plane agents.

Required Changes:

- Build a nanopb + protobuf schema parser in userspace.

- Extend seL4’s bootinfo or IPC setup to include config channels to agents.

- Introduce a privileged capability class for agents allowed to inject/update BPF programs.

3. Unikernel Deployment + Build System

What seL4 is Missing:

- General-purpose apps typically run with CAmkES or custom C startup code.

- Not optimized for Unikraft-style build pipeline.

What We Need to Do:

-

Develop a custom build system (Yocto-style or Nix-style) to generate:

- Minimal seL4 kernel

- Statically linked app with eBPF loader/agents

- Flattened bootable binary image

-

Ensure each deployment links only what is needed.

Required Changes:

- Fork or modify CMake/Ninja flow for seL4.

- Strip unused CAmkES bits or use libsel4 + libsel4runtime directly.

- Integrate LTO, dead code stripping, and optionally LLVM-based BPF JIT pipeline.

4. MicroBPF-Specific Capability Types

What seL4 is Missing:

- No semantics for eBPF-safe objects (maps, helpers).

- Capabilities are coarse-grained and static.

What we Need to Do:

-

Define custom capability types for:

- BPF maps (array, perf counters, ring buffer).

- Hook points (i.e. “observe this IPC endpoint” or “hook this memory region”).

Required Changes:

- Add new object types to

objecttype.c,structures.h, and object model. - Extend

deriveCap(),provide_cap()logic for custom cap behaviors. - Modify

sel4.xmlto expose new syscall interfaces, if needed.

5. Boot Time Optimization

What seL4 is Missing:

- Base seL4 boots fast, but once we add CAmkES and other init code, it slows down.

What we Need to Do:

- Use hand-rolled

initapp, no CAmkES, no dynamic linking. - Preload all cap tables and untyped memory pools at compile time (no dynamic allocation).

Required Changes:

- Use

init_kernel()as-is, but customize our root server creation path. - Hardcode our “BPF control plane agent” and its memory map into the final image.

6. Instrumentation and Observability

What seL4 is Missing:

- No kernel metrics, logging, or counters.

- No in-kernel trace points.

What We Need to Do:

-

Add instrumentation hooks inside:

- IPC

- TCB scheduler

- Memory faults

-

Export these hooks as BPF events, or memory-mapped counters.

Required Changes:

- Define a new struct in

structures.hfor per-core/per-thread metrics. - Modify scheduler/IPC code paths to optionally invoke BPF trace helpers.

- Map shared metric regions to privileged userspace (e.g. via

provide_cap()).

7. Optional: Formal Verification for Our Changes

What seL4 Already Has:

- Full proof stack (Isabelle/HOL) for vanilla kernel.

Challenge:

- Any change we make in core seL4 (especially eBPF runtime in-kernel) invalidates existing proofs.

What we Could Do:

- Keep eBPF logic in userspace (initially), and limit kernel changes to observable state only.

- Later: Write separate formal models of our BPF verifier or sandbox using Coq/Isabelle.

Bonus: Simplified Phased Map

| Phase | Goal | seL4 Impact |

|---|---|---|

| Phase 1 | Static unikernel + BPF in userspace | No kernel mods. Custom userspace loader + cap layout |

| Phase 2 | Dynamic config + OTA via Protobuf | IPC-based control agent, minor kernel changes |

| Phase 3 | Signed BPF + attestation | Add capability for attestation agent + cap filtering |

| Phase 4 | Verified BPF verifier | Separate Coq/Isabelle module, or deductive verification |

| Phase 5 | Distributed RISC-V federation | seL4 unchanged; build custom comm layer in userland |

Summary: What we’re Turning seL4 Into

We’re evolving seL4 into a “formally verified kernel substrate” for an eBPF-based secure unikernel runtime:

- Replaces dynamic OS logic with provable, in-kernel scripts.

- Enables hot-swappable behavior in embedded systems.

- Preserves seL4’s guarantees (if we modularize carefully).

Think: NixOS meets eBPF meets aerospace-grade OS kernel.

seL4 Microkernel Architecture Overview

well this is for crazy people (this is what i understand and have read - my interpretation could be wrong)

Purpose and Scope

This part of document provides a technical overview of the seL4 microkernel architecture, covering its core subsystems, initialization process, and fundamental abstractions. It focuses on the kernel’s internal structure, major components, and their interactions during system startup and runtime operation.

For user-space API and application development, see the API documentation. For architecture-specific implementation details, refer to the platform-specific documentation.

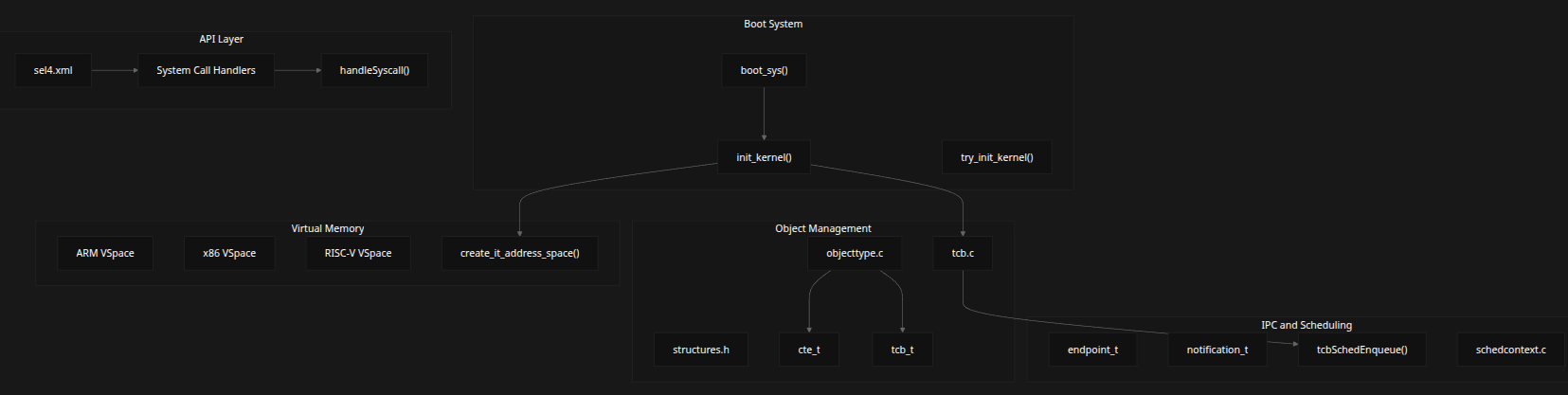

High-Level System Architecture

The seL4 microkernel consists of several major subsystems that work together to provide a minimal, capability-based operating system kernel:

- IPC and Scheduling

- Virtual Memory

- Object Management

- Boot System

- API Layer

sel4.xml-

System Call Handlers

handleSyscall()init_kernel()boot_sys()try_init_kernel()

-

Core files:

tcb.cobjecttype.cstructures.h

-

Key Data Types:

cte_ttcb_t

-

Architecture-specific VSpaces:

- ARM VSpace

- x86 VSpace

- RISC-V VSpace

-

Key Functions:

create_it_address_space()endpoint_tnotification_ttcbSchedEnqueue()

Sources:

src/kernel/boot.c: lines 1–1000src/object/tcb.c: lines 1–100src/object/objecttype.c: lines 1–100include/object/structures.h: lines 1–300libsel4/include/interfaces/sel4.xml: lines 1–100

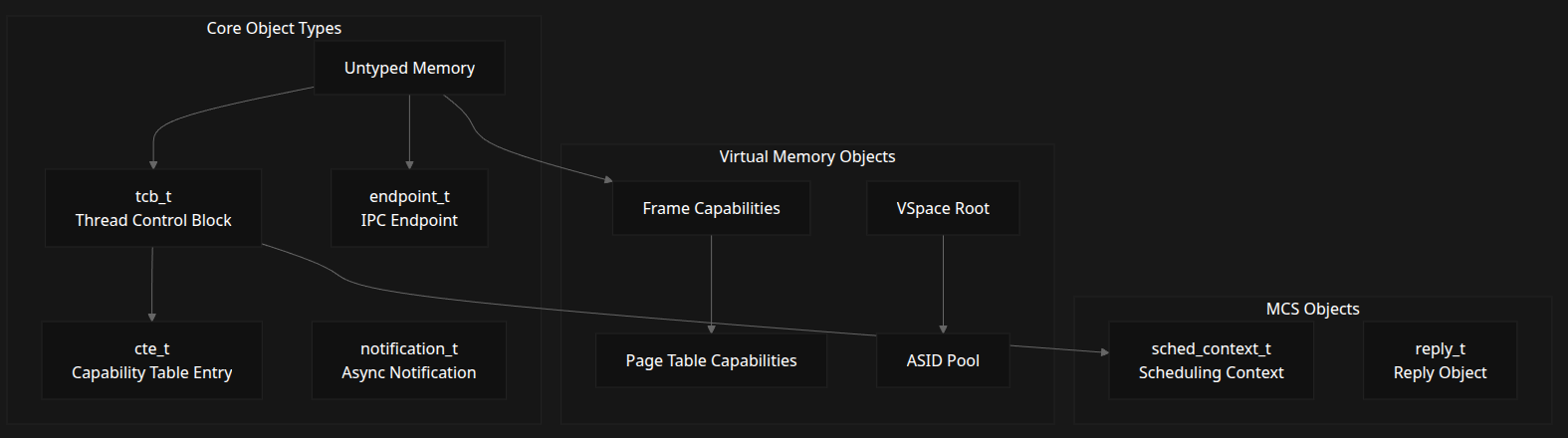

Core Kernel Objects and Data Structures

The seL4 kernel is built around several fundamental object types that represent different system resources and abstractions:

Object Categories

- MCS Objects

- Virtual Memory Objects

- Core Object Types

Important Types

| Type | Description |

|---|---|

tcb_t |

Thread Control Block |

cte_t |

Capability Table Entry |

endpoint_t |

IPC Endpoint |

notification_t |

Async Notification |

| Untyped Memory | Memory without specific type |

| Frame Capabilities | For memory pages |

| Page Table Capabilities | VSpace navigation |

| ASID Pool | Address space ID pool |

| VSpace Root | Root of virtual memory tree |

sched_context_t |

Scheduling Context |

reply_t |

Reply Object for IPC |

Thread Control Block (tcb_t)

Represents a thread’s execution context:

- Scheduling info: Priority, domain, scheduling context pointer

- Capability space: Root CNode (

tcbCTable) - Virtual memory: VSpace root (

tcbVTable) - IPC buffer: Pointer to IPC buffer (

tcbBuffer) - Arch-specific: Register and FPU state

Capability Table Entry (cte_t)

Each cte_t contains:

- A

cap_t(capability) - Metadata for derivation tracking (

cteMDBNode)

Sources:

include/object/structures.h: lines 154–158src/object/tcb.c: lines 1–50include/object/tcb.h: lines 1–50

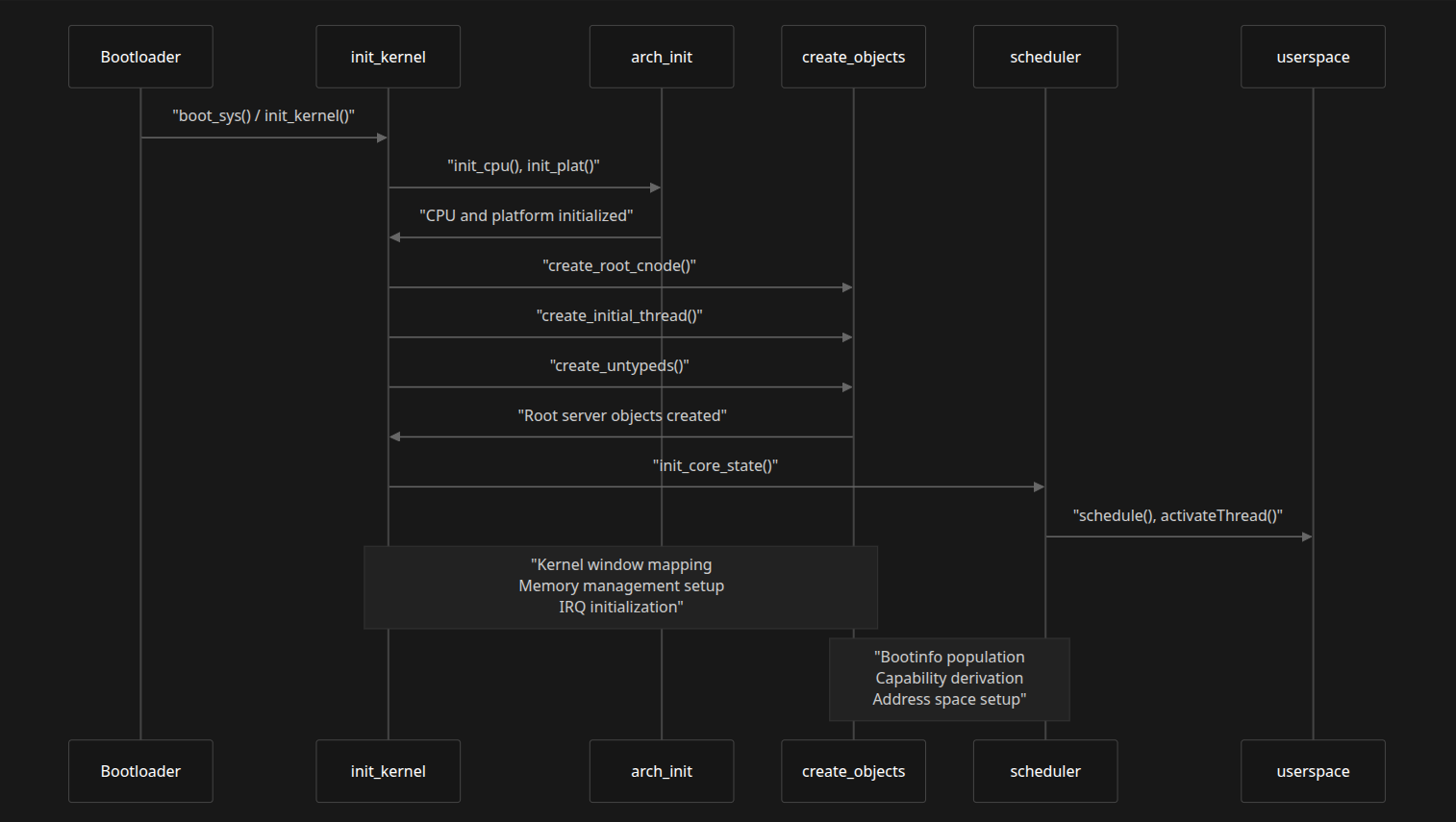

System Initialization Flow

The kernel initialization follows a multi-stage process:

Key Stages

| Stage | Responsibilities |

|---|---|

| Kernel window mapping | Setup of virtual memory |

| Memory management setup | Memory regions, frame caps |

| IRQ initialization | Interrupt handling setup |

| Bootinfo population | Create BootInfo frame |

| Capability derivation | Initial caps to RootServer |

| Address space setup | VSpace and ASIDs initialized |

| Object creation | create_root_cnode(), create_initial_thread(), create_untypeds() |

| Core state init | init_core_state(), schedule(), activateThread() |

Key Functions

| Function | Purpose | Location |

|---|---|---|

init_kernel() |

Main kernel entry point | src/arch/*/kernel/boot.c |

init_cpu() |

Arch-specific CPU setup | src/arch/*/kernel/boot.c |

map_kernel_window() |

Kernel memory setup | src/arch/*/kernel/vspace.c |

create_root_cnode() |

Initial cap space | src/kernel/boot.c (277–290) |

create_initial_thread() |

Root server thread | src/kernel/boot.c (493–569) |

init_freemem() |

Memory allocator init | src/kernel/boot.c (931–1014) |

Sources:

src/kernel/boot.c: 493–677src/arch/arm/kernel/boot.c: 334–677src/arch/x86/kernel/boot_sys.c: 703–735src/arch/riscv/kernel/boot.c: 456–504

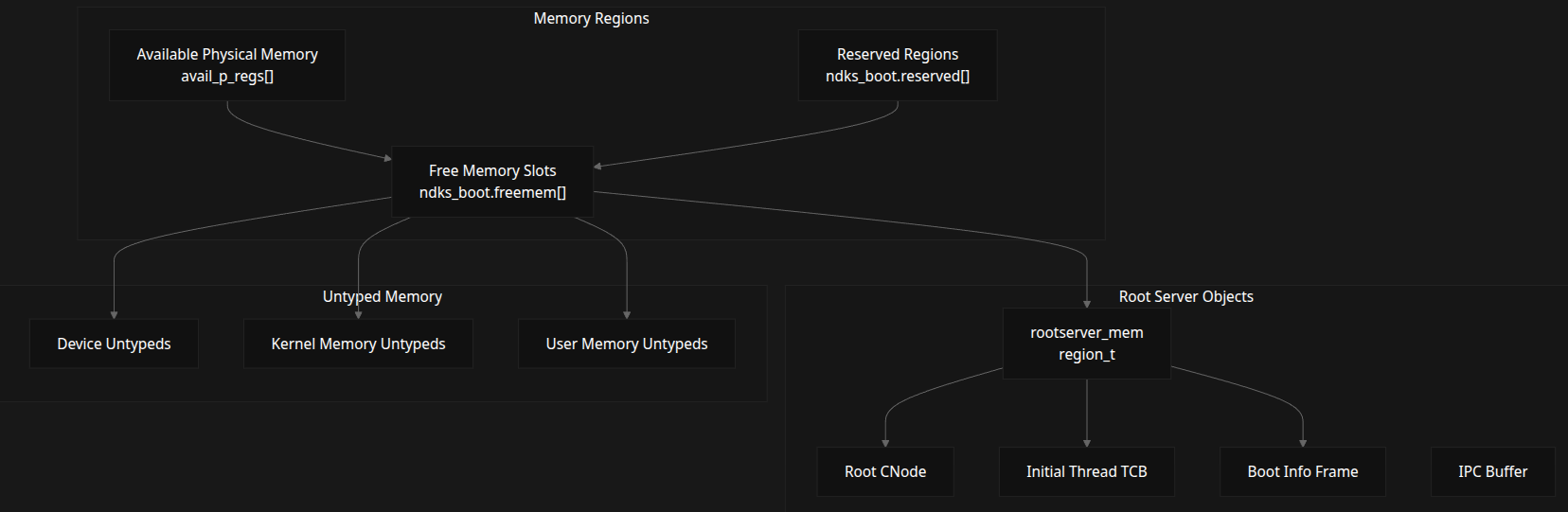

Boot Memory Management

During boot, seL4 sets up memory tracking and allocation using:

- Untyped Memory

- Root Server Objects

- Memory Regions

Memory Structures

| Name | Purpose |

|---|---|

avail_p_regs[] |

Available physical memory |

ndks_boot.reserved[] |

Reserved regions |

ndks_boot.freemem[] |

Free slots for allocator |

rootserver_mem |

Region for root objects |

| Root CNode | Capability space |

| Initial Thread TCB | First thread |

| Boot Info Frame | Metadata for userspace |

| IPC Buffer | IPC space for root thread |

| Device Untypeds | Device memory |

| Kernel/User Untypeds | Allocatable memory |

Memory Functions

init_freemem(): Setup free regionsalloc_rootserver_obj(): Allocate memory for root servercreate_untypeds(): Create untyped capsreserve_region(): Exclude regions from use

Sources:

src/kernel/boot.c: 46–117src/kernel/boot.c: 155–189src/kernel/boot.c: 767–831src/kernel/boot.c: 931–1014

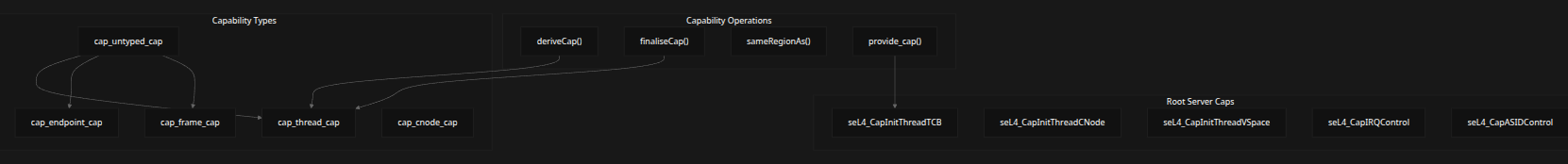

Capability System Architecture

The seL4 kernel uses capabilities for fine-grained access control.

Key Concepts

- Root Server Caps

- Capability Types

-

Operations:

deriveCap()finaliseCap()sameRegionAs()provide_cap()

Capability Types

cap_untyped_capcap_thread_capcap_endpoint_capcap_frame_capcap_cnode_cap

Initial Capabilities (Well-known)

seL4_CapInitThreadTCBseL4_CapInitThreadCNodeseL4_CapInitThreadVSpaceseL4_CapIRQControlseL4_CapASIDControl

Sources:

src/object/objecttype.c: 62–102, 104–262libsel4/include/sel4/bootinfo_types.h: 14–32src/kernel/boot.c: 371–382

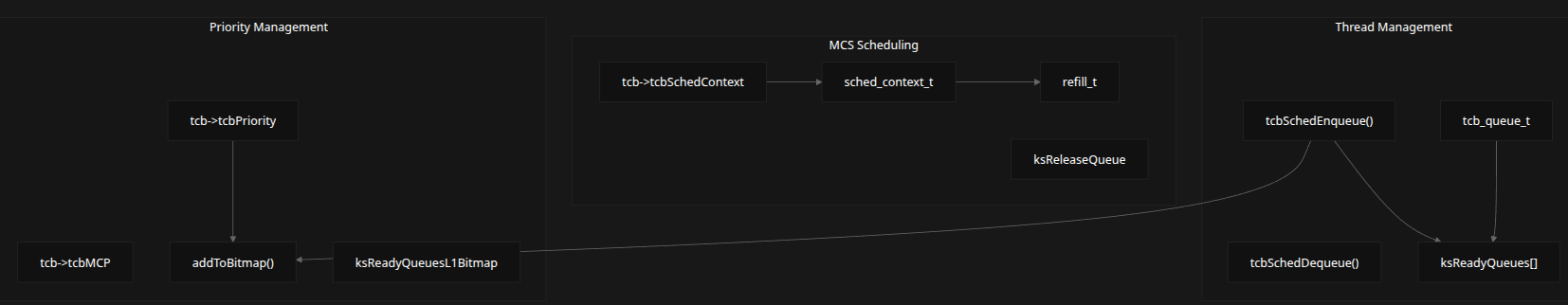

Thread and Scheduling System

seL4 uses a priority-based preemptive scheduler, with support for Mixed Criticality Scheduling (MCS).

Components

-

Thread Management

tcb_t,tcbSchedEnqueue(),tcbSchedDequeue()

-

Priority Bitmap

addToBitmap(),ksReadyQueues[],ksReadyQueuesL1Bitmap

-

Scheduling Context

sched_context_t,refill_t,tcb->tcbSchedContext

Functions

| Function | Purpose | Behavior |

|---|---|---|

tcbSchedEnqueue() |

Add thread to ready queue | Uses priority-based bitmap indexing |

tcbSchedDequeue() |

Remove thread | Updates bitmap if queue empty |

schedule() |

Pick next thread | Highest priority wins |

switchToThread() |

Context switch | Architecture-specific |

Sources:

src/object/tcb.c: 52–79, 115–141, 173–197src/object/schedcontext.c: 1–50